Google has officially expanded its open model lineup with the release of Gemma 2 2B, along with two new tools: ShieldGemma and Gemma Scope. The company has been clear about its goal of building responsibly developed AI models, and this release shifts toward models that can be used flexibly in different settings without giving up safety oversight or transparency. Here’s a look at what’s new and why it matters.

Gemma 2 2B is part of the second-generation Gemma model family, and it’s a much-needed addition for developers looking for a smaller model with reliable performance. Unlike its larger 9B and 27B siblings released earlier, the 2B version is optimized for resource-conscious users, especially those who want something manageable for local deployment.

This model works well on devices without high-end hardware. It runs efficiently on laptops and smaller servers while keeping output quality competitive for its size. Google designed it to be fast at inference and light in compute requirements, so people working on projects with modest computing power now have a solid option that doesn't need much tweaking.
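To make "modest computing power" more concrete, here is a back-of-the-envelope sketch of how much memory the weights alone would take at different numeric precisions. The parameter count of roughly 2.6 billion is an assumption taken as an approximate figure (check the model card for the exact number), and the function name is made up for this illustration. It ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# PARAM_COUNT is an assumed, approximate figure, not an official spec.
PARAM_COUNT = 2.6e9

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(param_count: float, dtype: str) -> float:
    """Return the memory needed for the weights alone, in GiB."""
    return param_count * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gib(PARAM_COUNT, dtype):.1f} GiB")
```

Under that assumption, 16-bit weights come to a little under 5 GiB, which is why a model of this size can fit on a laptop-class GPU or in ordinary system RAM, and why quantized variants fit on smaller devices still.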

Gemma 2 2B was trained on a mix of publicly available and licensed content. Google continues to avoid private or proprietary datasets in these open models, focusing on transparency. The model uses an architecture similar to Gemini, Google’s flagship family, but has been trimmed to suit smaller tasks better.

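The tokenizer itself is unchanged: Gemma 2 keeps the SentencePiece tokenizer, with a vocabulary of roughly 256k entries, used by the original Gemma models. The efficiency gains over first-generation Gemma come from architectural changes such as grouped-query attention and interleaved local and global attention layers, and the 2B model was trained with knowledge distillation from a larger model.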

ShieldGemma is a tool for filtering, scoring, and managing responses generated by Gemma models. Google is offering it as a starter method for users to introduce safety layers over open models without needing to develop guardrails from scratch.

How It Works

ShieldGemma is a set of classifier models built on Gemma 2 that score text against safety policies. The published categories are sexually explicit content, dangerous content, hate speech, and harassment, and the classifiers can check both user prompts and generated outputs. Each check yields a probability that the text violates a given policy rather than a single safe/unsafe verdict, so developers can set their own thresholds and decide whether to block a response entirely or just flag it for review.
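The block-or-flag decision described above can be sketched as a small routing function. This is not ShieldGemma's API: the category names, thresholds, and the `decide` function are all hypothetical, and the scores are assumed to be per-category probabilities produced by some upstream classifier.

```python
from enum import Enum
from typing import Dict, List, Tuple


class Action(Enum):
    ALLOW = "allow"
    FLAG = "flag"
    BLOCK = "block"


# Hypothetical thresholds per category: (flag_at, block_at).
# Category names are placeholders, not an official taxonomy.
DEFAULT_POLICY: Dict[str, Tuple[float, float]] = {
    "hate_speech": (0.3, 0.7),
    "harassment": (0.4, 0.8),
}


def decide(
    scores: Dict[str, float],
    policy: Dict[str, Tuple[float, float]] = DEFAULT_POLICY,
) -> Tuple[Action, List[str]]:
    """Return the strictest action triggered and the categories behind it."""
    flagged: List[str] = []
    blocked: List[str] = []
    for category, score in scores.items():
        if category not in policy:
            continue  # ignore categories the policy does not cover
        flag_at, block_at = policy[category]
        if score >= block_at:
            blocked.append(category)
        elif score >= flag_at:
            flagged.append(category)
    if blocked:
        return Action.BLOCK, blocked
    if flagged:
        return Action.FLAG, flagged
    return Action.ALLOW, []
```

Keeping the thresholds in a plain dictionary means a stricter deployment, such as an education app, can pass its own policy without touching the routing logic.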

If you’re integrating Gemma into apps that will be shared with users, such as in education, healthcare, or community platforms, ShieldGemma offers a straightforward layer of safety review. Instead of building your own moderation system or plugging in a third-party tool, you get a native method built on the same model family.

ShieldGemma is openly available, with model weights distributed through hubs such as Hugging Face and Kaggle, and is ready to be included in pipelines across different programming setups. Google has packaged it so it can be used as-is or customized depending on project needs.

Gemma Scope is an interpretability tool that helps explain how Gemma 2 models process prompts internally. It is a collection of open sparse autoencoders trained on the models' internal activations, built to help researchers, developers, and even curious users observe and test model behavior.

Gemma Scope goes deeper than most basic prompt testers. Its sparse autoencoders break a layer's activations into features that are easier to interpret, so you can see which features fire on an edge case and track how they, and the outputs, change when a small word in the prompt is adjusted. This is useful for identifying model biases, weaknesses, and inconsistencies that might otherwise go unnoticed.
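The idea of adjusting one small word in a prompt and watching what changes can be sketched without any particular tool. The code below is a minimal illustration, not Gemma Scope itself: `swap_word`, `compare_outputs`, and `toy_generate` are hypothetical names, and `toy_generate` is a stand-in you would replace with a real model call.

```python
import difflib
from typing import Callable, Dict


def swap_word(prompt: str, old: str, new: str) -> str:
    """Replace the first whole-word occurrence of `old` with `new`."""
    words = prompt.split()
    for i, word in enumerate(words):
        if word == old:
            words[i] = new
            break
    return " ".join(words)


def compare_outputs(
    generate: Callable[[str], str], prompt: str, old: str, new: str
) -> Dict[str, object]:
    """Run the original and perturbed prompts and measure output similarity."""
    variant = swap_word(prompt, old, new)
    base_out = generate(prompt)
    variant_out = generate(variant)
    # Ratio is 1.0 for identical strings and falls toward 0.0 as they diverge.
    similarity = difflib.SequenceMatcher(None, base_out, variant_out).ratio()
    return {
        "variant_prompt": variant,
        "base_output": base_out,
        "variant_output": variant_out,
        "similarity": similarity,
    }


def toy_generate(prompt: str) -> str:
    """Stand-in for a real model call; it only upper-cases the prompt."""
    return prompt.upper()
```

A low similarity score after a one-word swap, for example changing a pronoun or a name, is a cue to look more closely at that prompt for bias or brittleness.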

What sets Scope apart is its support for live evaluations. You can run scenarios side-by-side and compare results in real time. It's especially helpful when testing model updates or when comparing the same input across different versions of Gemma.

For people new to working with language models, Scope provides visibility into what is often treated as a black box. You can track how the model understands prompts, what influences its tone, and how temperature or top-k settings affect generation. It gives concrete data that helps users feel less in the dark about how these models behave.
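For readers who have not met temperature and top-k before, the sketch below shows what those two settings do to a next-token distribution. It is a generic illustration of the standard technique, not code from Gemma or Gemma Scope, and the function names and example logits are invented for the purpose.

```python
import math
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Convert logits to probabilities, subtracting the max for stability."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(
    logits: List[float], temperature: float = 1.0, top_k: Optional[int] = None
) -> List[float]:
    """Apply temperature scaling, then optional top-k filtering, then softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Lower temperature sharpens the distribution; higher flattens it.
    scaled = [x / temperature for x in logits]
    if top_k is not None and top_k < len(scaled):
        # Keep the k highest logits (ties at the cutoff are all kept).
        cutoff = sorted(scaled, reverse=True)[top_k - 1]
        scaled = [x if x >= cutoff else -math.inf for x in scaled]
    return softmax(scaled)
```

With the same logits, a temperature below 1 pushes more probability onto the top token, a temperature above 1 spreads it out, and top-k sets the probability of everything outside the k best candidates to zero before sampling.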

With Gemma 2 2B, Google is addressing the gap between powerful AI models and real-world usability. Developers often have to pick between large models that require lots of hardware and smaller ones that miss the mark in performance. Gemma 2 2B avoids that trade-off by offering something you can run locally that still delivers high-quality results.

At the same time, Google isn’t just pushing out another model—they’re providing the tools to manage and study it effectively. ShieldGemma and Gemma Scope show an understanding that models can’t be used in isolation anymore. Whether you're concerned about ethical use or technical soundness, these tools help users stay on track.

The fact that all three—Gemma 2 2B, ShieldGemma, and Gemma Scope—are open and accessible means that independent developers, researchers, and smaller teams aren’t being left behind. You don’t need deep pockets or enterprise-level support just to start experimenting or deploying responsibly.

By combining model performance with safety and interpretability, Google is trying to balance power with control. They’re not just releasing a tool—they're releasing a comprehensive stack that encompasses creation, moderation, and understanding within a single ecosystem.

With this release, Google adds another layer to its AI strategy: smaller models with thoughtful guardrails and transparency tools. Whether you're experimenting, building an app, or analyzing how AI makes decisions, this update has something practical to offer, and you don't need massive infrastructure or endless configuration to get started. The updates show that useful AI tools don't have to come with heavy requirements. Sometimes clarity and accessibility are what make a model work well in real-world use.

Advertisement

Ready to run powerful AI models locally while ensuring safety and transparency? Discover Gemma 2 2B’s efficient architecture, ShieldGemma’s moderation capabilities, and Gemma Scope’s interpretability tools

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

Fond out the top AI tools for content creators in 2025 that streamline writing, editing, video production, and SEO. See which tools actually help improve your creative flow without overcomplicating the process

How can Google’s Gemma 3 run on a single TPU or GPU? Discover its features, speed, efficiency and impact on AI scalability.

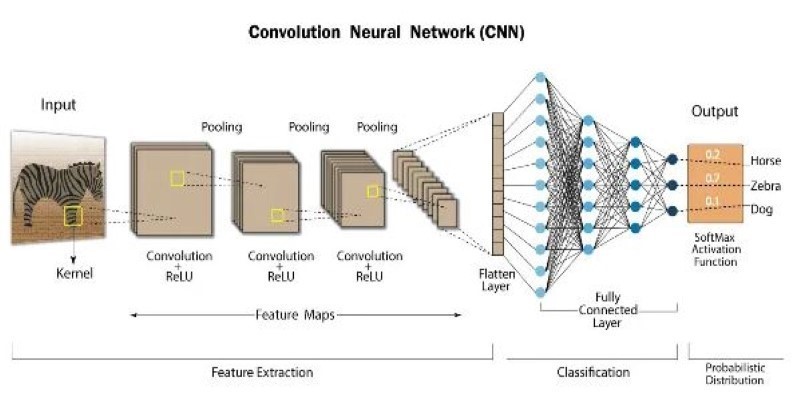

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

How using Xet on the Hub simplifies code and data collaboration. Learn how this tool improves workflows with reliable data versioning and shared access

How to use the ternary operator in Python with 10 practical examples. Improve your code with clean, one-line Python conditional expressions that are simple and effective

How RLHF is evolving and why putting reinforcement learning back at its core could shape the next generation of adaptive, human-aligned AI systems

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

Explore the top 10 large language models on Hugging Face, from LLaMA 2 to Mixtral, built for real-world tasks. Compare performance, size, and use cases across top open-source LLMs

How Fireworks.ai changes AI deployment with faster and simpler model hosting. Now available on the Hub, it helps developers scale large language models effortlessly

Discover three new serverless inference providers—Hyperbolic, Nebius AI Studio, and Novita—each offering flexible, efficient, and scalable serverless AI deployment options tailored for modern machine learning workflows