Advertisement

The world of AI isn't short of new names, but a new entrant often steps in and offers something different. Fireworks.ai has just joined the Hub, and for developers, researchers, and companies working with machine learning, this addition could make day-to-day experimentation and scale far less frustrating. Fireworks.ai focuses on reducing the weight of infrastructure, making model hosting and deployment faster and simpler. It doesn't try to dazzle with buzzwords; instead, it targets real pain points—slow load times, scaling limits, expensive GPU bills—and does something about them.

This article examines Fireworks.ai's unique capabilities, how it differs from existing services, and why its arrival on the Hub could transform how models are served, tested, and used across projects.

AI deployment can be clunky. Even after fine-tuning a model, getting it into the world quickly and reliably still takes effort. That's where Fireworks.ai makes its pitch: deploy large language models without wrestling with slow boot times or worrying about infrastructure costs stacking up.

At its core, Fireworks.ai is a hosting platform optimized for large models. It handles the infrastructure automatically and focuses heavily on delivering low-latency responses. That means models don't need to "warm up" each time they're called. Whether you're building a chatbot, a document summarizer, or a search assistant, performance stays consistent and fast.

Another strong feature is how it manages scale. When moving from a working prototype to a public app, many projects hit a wall. Fireworks.ai’s architecture makes it easier to go from small experiments to production-level services without rewriting large backend

parts.

This solves a daily problem for developers. Rather than spinning up GPU-heavy instances, monitoring usage, and constantly reconfiguring autoscaling rules, they can upload their model and call it via API—simple, clean, and efficient.

One of the more refreshing aspects of Fireworks.ai is its direct approach to pricing. AI infrastructure tends to be vague, with many footnotes and surprise overages. Fireworks.ai takes a more open route. Pricing is based on usage and actual compute time, not obscure licensing models or hidden bandwidth limits. This matters to smaller teams or solo developers who want to control costs without sacrificing performance.

But pricing alone isn't enough. The performance has to be solid. And that's where Fireworks.ai earns its space. It offers fine-tuned versions of major models optimized for inference speed and memory footprint. This is crucial for businesses that require real-time interaction, such as chatbots or AI copilots, where even a half-second delay can significantly impact the user experience.

It also supports high-availability clusters for production-scale applications, ensuring minimal downtime and consistent throughput even under heavier loads. That reliability is often harder to get with do-it-yourself solutions that run on shared cloud infrastructure.

Fireworks.ai doesn’t require heavy integrations, either. It plays well with existing ML libraries and APIs, which reduces the time it takes to onboard and test things. You can plug in your Hugging Face models, set up authentication, and hit the endpoint immediately.

Many services offer model hosting, but Fireworks.ai stands out because of its assumptions. First, it assumes people want to iterate fast, so it's built to remove slow setup and test cycles. Most existing AI hosting tools need some setup time or constant tuning. Here, the goal is instant access with minimal tweaks.

Second, Fireworks.ai is not just another wrapper around open-source models. It adds meaningful optimizations under the hood. Its inference engine has been tuned to handle larger input sizes without timing out, a common problem with hosted models on other platforms.

Third, and most significantly, it allows for public and private models. That means you can bring your model, host it securely, and still benefit from the speed and infrastructure advantages. Whether you're using open-source LLMs or proprietary, fine-tuned models, the hosting environment remains the same.

Ultimately, it prioritizes transparency over marketing. The documentation is clear, the onboarding is easy, and you don't need a sales call to get started. It's built for people who want to ship products, not sit through pitch decks.

Adding Fireworks.ai to the Hub is a win for developers and researchers already using the ecosystem. This service offers a clear path forward if you're building something and need fast inference without jumping through hoops.

The integration with the Hub enables a direct connection to Fireworks. AI-hosted models as part of your pipeline without exporting, reconfiguring, or adapting your formats. You get fast endpoints, support for high-traffic loads, and tools to monitor usage—all in one place.

For those working on commercial applications, it's a strong alternative to other cloud providers that often require more setup and charge for idle time. It opens a new path for open-source collaborators to share models with guaranteed performance and no major hosting burden.

Fireworks.ai also appears to be invested in community growth, offering free tiers and discounts for early-stage developers. This fits well with the Hub's open, share-first culture, where tools and models are meant to be accessible and flexible.

Fireworks.ai joins the Hub with a practical approach to model deployment that cuts through complexity. It offers fast, scalable hosting for large models without forcing developers to manage heavy infrastructure or unpredictable costs. Its clear documentation, consistent performance, and easy integration make it suitable for individual projects and commercial-scale applications. Support for custom and public models, along with pricing that reflects real usage, gives teams more control without added friction. This addition to the Hub creates more opportunities for efficient AI development and model sharing, helping users focus on building instead of wrestling with backend logistics. With low-latency inference, simple setup, and reliable uptime, it’s built for modern AI workflows.

Advertisement

How the Open Leaderboard for Hebrew LLMs is transforming the evaluation of Hebrew language models with open benchmarks, real-world tasks, and transparent metrics

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

How the world’s first AI-powered restaurant in California is changing how meals are ordered, cooked, and served—with robotics, automation, and zero human error

Learn the regulatory impact of Google and Meta antitrust lawsuits and what it means for the future of tech and innovation.

Ready to run powerful AI models locally while ensuring safety and transparency? Discover Gemma 2 2B’s efficient architecture, ShieldGemma’s moderation capabilities, and Gemma Scope’s interpretability tools

Think Bash loops are hard? Learn how the simple for loop can help you rename files, monitor servers, or automate routine tasks—all without complex scripting

The paperclip maximizer problem shows how an AI system can become harmful when its goals are misaligned with human values. Learn how this idea influences today’s AI alignment efforts

Anthropic secures $3.5 billion in funding to compete in AI with Claude, challenging OpenAI and Google in enterprise AI

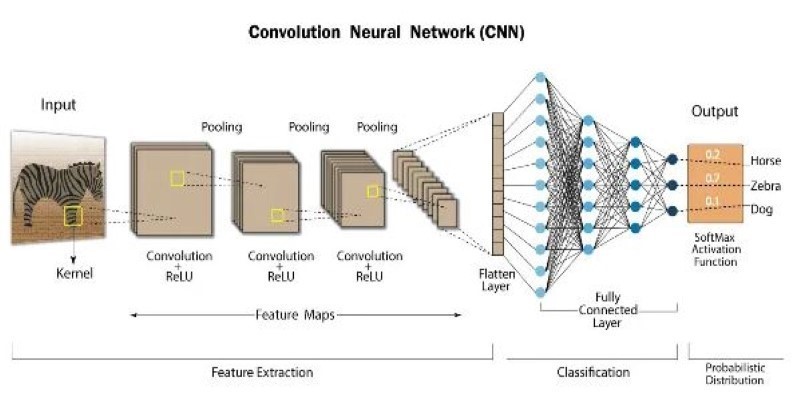

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

Explore the top 10 large language models on Hugging Face, from LLaMA 2 to Mixtral, built for real-world tasks. Compare performance, size, and use cases across top open-source LLMs

How PaliGemma 2, Google's latest innovation in vision language models, is transforming AI by combining image understanding with natural language in an open and efficient framework