Advertisement

Google has spent years trying to bring visual and language understanding into one model. The early versions were clunky—capable of describing basic images or answering direct questions but missing context. That’s changing with PaliGemma 2. This new generation of vision language models doesn’t just match images with words; it connects both in a way that makes more sense.

It understands details, follows instructions, and reasons visually. Google’s goal is simple: to create models that feel more useful, more flexible, and easier to work with. PaliGemma 2 moves a step closer to that idea.

PaliGemma 2 is a new family of open vision language models (VLMs) from Google. These models are built to understand both text and images at the same time, with strong results in tasks that need visual reasoning. At the technical level, PaliGemma 2 pairs an image encoder with a language decoder. The encoder, based on SigLIP, handles image recognition and structure, while the decoder uses the Gemma model to generate or interpret text.

The models are offered in two main sizes—3 billion and 7 billion parameters. Despite being large, they’re optimized to run well on standard hardware. That makes it easier for developers and researchers to test and build without heavy resources.

PaliGemma 2 is also open-weight. This means the actual model files are available to the public. Developers and researchers can use, test, or fine-tune them without needing paid access. That openness supports research, speeds up testing, and invites a wider range of people to work with the technology.

The image encoder is powered by SigLIP, a contrastive vision model that’s better at picking up visual detail. Instead of just learning to match pictures with labels, it learns from patterns and structures using sigmoid loss. This helps the model handle subtle differences in images—like posture, expression, or background elements—and create a deeper understanding.

Once the image is processed, the language decoder steps in. It can describe the scene, answer questions, or follow instructions tied to what it sees. It’s not just repeating patterns; it combines image data and language input to form useful responses.

A key part of PaliGemma 2’s performance comes from instruction tuning. The model was trained using prompts designed to simulate real-world requests. This makes it better at understanding what users want, whether the input is a sentence, a command, or a question. It’s trained on a mix of real and synthetic data to give it a broad knowledge base.

Benchmarks show that PaliGemma 2 performs strongly in tasks like COCO image captioning and VQAv2 (visual question answering). It handles zero-shot and few-shot tasks well, showing that it generalizes across use cases. While it doesn’t beat the biggest proprietary models in every category, it offers a strong balance of performance and efficiency—especially since it’s fully accessible.

The use cases for PaliGemma 2 go well beyond captioning pictures. It’s designed to follow instructions and reason across both vision and language. That opens the door for developers building applications in areas like accessibility, education, document processing, and search.

Inaccessibility models like this can help describe the world to people who are visually impaired. Because PaliGemma 2 captures fine details and follows natural prompts, it can provide descriptions that are more accurate and useful. This can support voice-based tools that give real-time information from a camera feed.

In education, learning tools often rely on visuals—such as diagrams, photos, and graphs. PaliGemma 2 can explain those visuals in different tones based on the user, whether that’s a child, a teen, or a teacher. It doesn’t just read out facts—it can adjust its response based on context and instruction.

Document automation is another practical space. Many businesses still rely on forms and scanned documents. PaliGemma 2 can help identify parts of a form, extract useful information, or even explain what's on the page. Because it can run on modest hardware, it’s usable in environments where cloud access might not be allowed.

The fact that it’s open-weight makes it a strong choice for researchers. Instead of working around limitations or paywalls, they can test and customize the model directly. It’s easier to study its behavior, improve safety features, or explore new training methods when you can see what’s under the hood.

PaliGemma 2 is part of a shift in artificial intelligence. Models are moving from text-only understanding to grounded perception—connecting vision, language, and logic. That helps them handle real-world tasks better because they're not just guessing from words—they’re looking at actual content and responding to it.

As AI systems become more common in everyday tech, the ability to “see” changes what machines can help with, from reading menus to analyzing maps or interpreting body language. It also raises challenges, like handling biased images or avoiding misinterpretation.

Google says it built safety layers into PaliGemma 2, including filters and limitations to reduce harm. Like all models, it isn’t perfect. It can make odd predictions or miss context, especially with unusual images. But by keeping it open, Google allows others to test and improve it. That openness supports growth and trust.

The model weights and tools are available under a generous license. Setup is simple enough for developers or researchers with basic machine learning experience. Projects don’t have to wait—they can start now.

PaliGemma 2 isn’t the largest model out there. It offers a good balance between speed, quality, and usability.

PaliGemma 2 is a step forward in making vision-language models more accessible, more useful, and easier to work with. It understands both words and images and connects them in ways that feel practical and real. For developers, researchers, and teams looking for an open model that can see and talk, this one delivers. It’s not trying to be flashy. It’s built to work—and it does that well. With PaliGemma 2, Google isn’t just pushing the field forward; it’s giving more people the tools to do the same. That makes a difference.

Advertisement

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

Need to save Python objects between runs? Learn how the pickle module serializes and restores data—ideal for caching, model storage, or session persistence in Python-only projects

A clear and practical guide for open source developers to understand how the EU AI Act affects their work, responsibilities, and future projects

Discover three new serverless inference providers—Hyperbolic, Nebius AI Studio, and Novita—each offering flexible, efficient, and scalable serverless AI deployment options tailored for modern machine learning workflows

How the Open Leaderboard for Hebrew LLMs is transforming the evaluation of Hebrew language models with open benchmarks, real-world tasks, and transparent metrics

How the world’s first AI-powered restaurant in California is changing how meals are ordered, cooked, and served—with robotics, automation, and zero human error

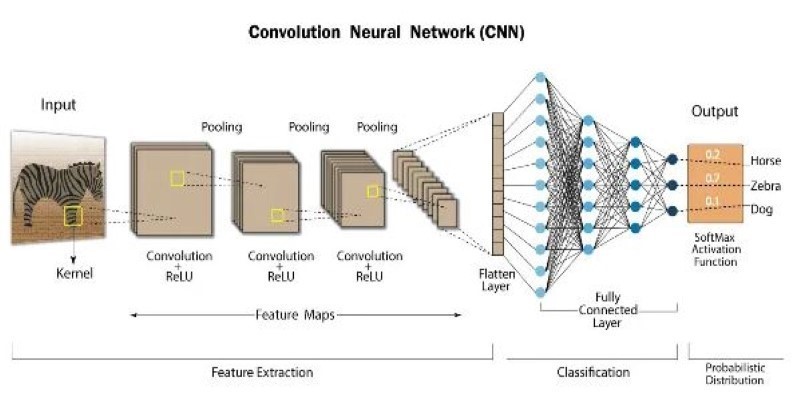

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

Explore the best AI Reels Generators for Instagram in 2025 that simplify editing and help create high-quality videos fast. Ideal for content creators of all levels

Getting the ChatGPT error in body stream message? Learn what causes it and how to fix it with 7 practical ChatGPT troubleshooting methods that actually work

Discover the next generation of language models that now serve as a true replacement for BERT. Learn how transformer-based alternatives like T5, DeBERTa, and GPT-3 are changing the future of natural language processing

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

How RLHF is evolving and why putting reinforcement learning back at its core could shape the next generation of adaptive, human-aligned AI systems