Advertisement

It’s easy to forget that the "RL" in Reinforcement Learning with Human Feedback was once the centerpiece of the method. Over time, though, its role has narrowed. Today’s AI systems still use RL in name, but not always in substance. In large language models, reinforcement learning often shows up only in the final phase of training, used more like a fine-tuning tool than a learning process. This raises a key question: What does it really mean to put reinforcement learning back into RLHF? And what could we gain if we did?

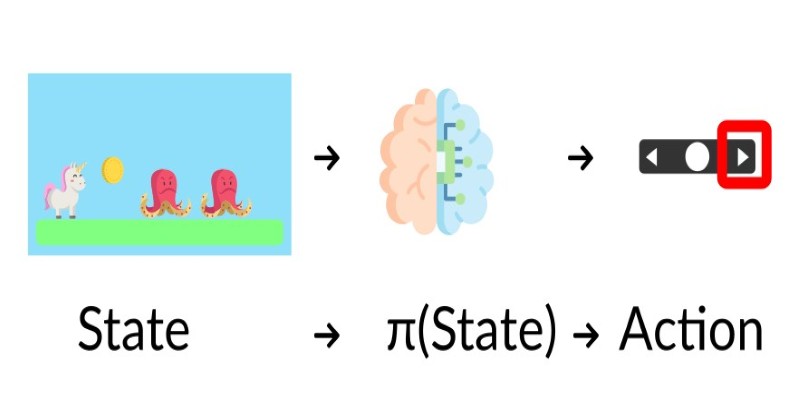

The current RLHF setup usually involves three main steps. First, a base model is trained on huge datasets using supervised learning. Next, a reward model is created by having humans rank multiple model outputs. Finally, reinforcement learning is applied to optimize responses that score higher according to the reward model.

That last phase—reinforcement learning—is often limited in scope. The model is trained to be more helpful, less risky, or more aligned with guidelines, but not much beyond that. There is little room for learning from long-term feedback or complex interactions. Instead of the kind of adaptive behaviour RL is known for, what we get is more of a focused clean-up job.

Classic RL is about learning through exploration and delayed rewards. It's used to teach agents how to navigate complex environments, make trade-offs, and develop long-term strategies. In many AI systems labeled as using RLHF, that depth is missing. The process is streamlined to get quick improvements based on simple, immediate feedback rather than long-term behavioural learning.

Putting RL back into RLHF doesn’t mean scrapping what works. It means building on the foundation to allow for deeper, more meaningful learning. True reinforcement learning brings strengths that are often left on the table: exploration, policy development, and learning from extended interactions.

Take conversation models as an example. Most are optimized for short, one-off exchanges. But real conversations are messy and unpredictable. If RL were used more thoroughly, a model could learn to maintain context over time, adapt to tone, or respond in ways that improve the overall experience, not just the current reply.

The same goes for instruction-following. Instead of always producing the same kind of output for a prompt, a more RL-integrated system could learn from trial and error—experimenting with how people respond to its outputs, adjusting its approach, and refining its behaviour.

This shift focuses on learning behaviours, not just single outputs. The model doesn't just ask, "Did this one response get a good score?" It asks, "How do different choices lead to better performance across time and tasks?" This opens the door to much more adaptive AI systems—ones that grow based on patterns, not presets.

So why hasn’t RL played a bigger role in RLHF yet? The biggest reason is that it’s hard to do well. In language systems, rewards are often vague. Unlike in games, where the score is clear, human feedback on text is messy. People have different opinions, and the context often changes.

Another problem is that RL is hard to stabilize. Algorithms like PPO are popular because they’re relatively safe, but they still need careful tuning. The training process can be unpredictable, and there’s always the risk of the model becoming less coherent or more biased if the signals aren’t balanced.

There’s also the issue of cost. Full-scale RL training takes time, computing resources, and lots of human feedback. Most companies simplify the process to save time and reduce expense, turning RL into more of a short feedback loop rather than an ongoing learning system.

And human feedback itself is a bottleneck. It’s one thing to say, "This output is better," but it's another to guide a model through learning how to handle unclear prompts, changing goals, or conflicting preferences. That kind of feedback is richer—but it's harder to collect and interpret.

Reinforcement learning has the potential to make AI systems more flexible and aligned with human goals. Currently, much of what is labelled RLHF produces models that sound good but don't actually adapt or improve with use. They rely on predefined signals and fixed datasets, which limits their ability to grow beyond their training.

If we give reinforcement learning a fuller role, AI systems could become more responsive to real-world interaction. They could learn how people actually use them, improve based on long-term feedback, and shift their behaviour when something isn't working. That kind of learning isn't easy, but it's the kind that leads to progress.

Instead of training models to match one-off examples, we could train them to develop strategies—to make better decisions over time, not just better guesses. That's the real value of reinforcement learning: not just improving accuracy but building systems that can think, adjust, and improve with each use.

Right now, most large models rely heavily on scale and data volume. Adding richer RL doesn’t mean abandoning that, but it does offer a path toward smarter, more capable systems that grow beyond their initial training.

RLHF was meant to blend human preferences with reinforcement learning, offering a way for AI to learn beyond raw data. However, in many implementations today, the "RL" component is reduced to a final tweak rather than an ongoing learning process. Bringing it back means allowing models to grow from experience, handle uncertainty, and make smarter decisions over time. That shift won't be easy—it demands better feedback systems, more exploration, deeper evaluation, and a move away from short-term optimization. But it's a necessary step if we want AI to become more adaptable, aligned, and genuinely useful beyond carefully curated prompts or scripted scenarios.

Advertisement

Explore the best AI Reels Generators for Instagram in 2025 that simplify editing and help create high-quality videos fast. Ideal for content creators of all levels

Discover the next generation of language models that now serve as a true replacement for BERT. Learn how transformer-based alternatives like T5, DeBERTa, and GPT-3 are changing the future of natural language processing

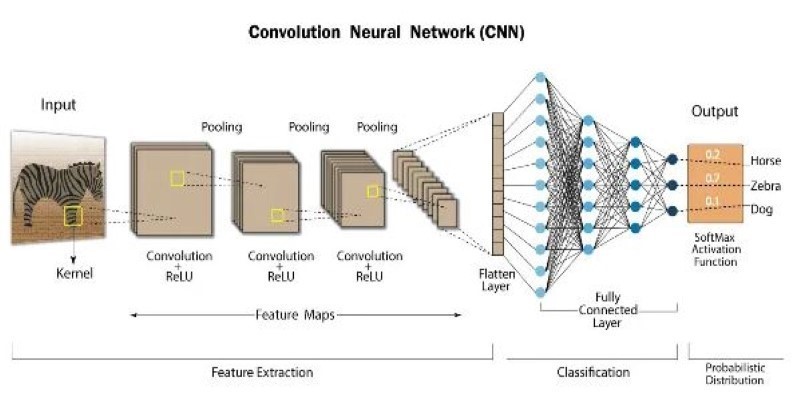

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

How Fireworks.ai changes AI deployment with faster and simpler model hosting. Now available on the Hub, it helps developers scale large language models effortlessly

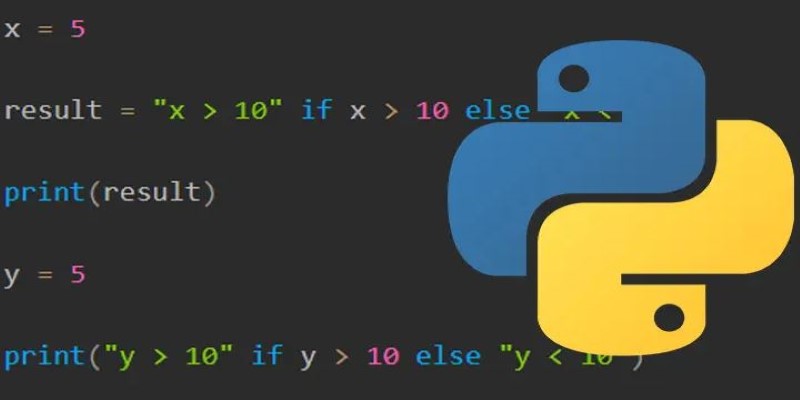

How to use the ternary operator in Python with 10 practical examples. Improve your code with clean, one-line Python conditional expressions that are simple and effective

Fond out the top AI tools for content creators in 2025 that streamline writing, editing, video production, and SEO. See which tools actually help improve your creative flow without overcomplicating the process

How the Open Leaderboard for Hebrew LLMs is transforming the evaluation of Hebrew language models with open benchmarks, real-world tasks, and transparent metrics

Learn the regulatory impact of Google and Meta antitrust lawsuits and what it means for the future of tech and innovation.

How RLHF is evolving and why putting reinforcement learning back at its core could shape the next generation of adaptive, human-aligned AI systems

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

How AutoGPT is being used in 2025 to automate tasks across support, coding, content, finance, and more. These top use cases show real results, not hype

A clear and practical guide for open source developers to understand how the EU AI Act affects their work, responsibilities, and future projects