BERT changed how machines understand language. When it arrived, it raised the bar in natural language processing and quickly became a standard component in many systems. From web search to question answering, BERT was built into countless applications. It introduced deep bidirectional pre-training, which gave models a more accurate grasp of how the words in a sentence relate to one another.

But while it was groundbreaking at the time, it also had clear limits: slow and costly pre-training, high resource demands, and poor performance on long texts. Newer models now outperform BERT on most fronts. They are not just upgrades but replacements, signaling a shift in the foundation of language modeling.

BERT’s architecture was built around the idea of bidirectional attention. Instead of reading sentences from left to right or right to left, BERT looked at all the words at once. This helped it learn how context shapes meaning. A word like “bank” could refer to a riverbank or a financial institution, and BERT could use nearby words to figure that out.
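To make “looking at all the words at once” concrete, the sketch below builds the attention mask that decides which positions each token may draw context from. It is a simplification: real models apply such masks inside multi-head self-attention over subword tokens, and the helper names `attention_mask` and `visible` are hypothetical. With a bidirectional (BERT-style) mask, “bank” can see “river”; with a left-to-right mask, it cannot.

```python
def attention_mask(n: int, causal: bool) -> list[list[int]]:
    """Return an n x n mask where mask[i][j] == 1 means position i may attend to position j."""
    # Bidirectional (BERT-style): every position sees every other position.
    # Causal (left-to-right): position i sees only positions 0..i.
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]


def visible(tokens: list[str], mask: list[list[int]], i: int) -> list[str]:
    """List the tokens that position i is allowed to attend to."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]
bidirectional = attention_mask(len(tokens), causal=False)
left_to_right = attention_mask(len(tokens), causal=True)

print(visible(tokens, bidirectional, 1))  # ['the', 'bank', 'of', 'the', 'river']
print(visible(tokens, left_to_right, 1))  # ['the', 'bank']
```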

Google released BERT's code and pre-trained weights as open source in 2018, and its popularity grew further when Google began using it in Search in 2019. The research community quickly built on its foundation. Models like RoBERTa refined the training recipe, while others, such as DistilBERT, compressed it for faster use. BERT's format became the go-to approach for language tasks, including classification, sentiment detection, and question answering.

Still, it had drawbacks. Its pre-training task, masked language modeling, hides a fraction of the words in a sentence and trains BERT to predict them from the surrounding context. This worked well for learning grammar and structure, but it does not mirror how language is actually produced. Because BERT is an encoder without a text-generating decoder, it could not write text or handle tasks such as summarization on its own, which restricted its applicability. Long documents were another problem. BERT accepts at most 512 tokens, which puts it at a disadvantage on long paragraphs or multi-page inputs.
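The masking step can be sketched as follows, assuming the commonly described BERT recipe: about 15% of positions are selected, and of those, 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged. This is a simplified illustration, not BERT's actual preprocessing code: it selects each token independently, works on whole words rather than WordPiece subwords, and does not skip special tokens such as `[CLS]` and `[SEP]`. The function name `mask_tokens` is hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Corrupt a token list for masked language modeling.

    Returns (corrupted, labels): labels[i] holds the original token at positions
    chosen for prediction and None elsewhere (no loss is computed there).
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80% of selected positions: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # Remaining 10%: keep the original token unchanged.
    return corrupted, labels


sentence = "the river bank flooded after the storm".split()
corrupted, labels = mask_tokens(sentence, vocab=sentence, seed=0)
print(corrupted)
print(labels)
```

Only the selected positions carry a training signal, and the model is never asked to produce text left to right, which is one way to see why BERT on its own is not a text generator.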

These challenges became more obvious as users pushed for models that could handle broader contexts with better efficiency. As tasks grew more complex, the need for a true replacement became clearer.

Several models have risen to take BERT's place, offering more flexibility and performance. One of the most notable is T5, a model that reframes all language tasks as text-to-text. Instead of having separate setups for classification, translation, or summarization, T5 treats all tasks as problems of generating text based on input. This approach makes it adaptable and easier to use across many applications.
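A minimal sketch of the text-to-text framing: three different tasks (translation, summarization, and a grammatical-acceptability classifier) all become pairs of plain strings, so one model and one training loss can cover them. The prefixes follow the style described for T5, but treat the exact wording as illustrative; the helper functions and the summarization example are hypothetical.

```python
# Each helper turns a task-specific example into a (source, target) pair of strings.

def translation_example(english: str, german: str) -> tuple[str, str]:
    return ("translate English to German: " + english, german)


def summarization_example(document: str, summary: str) -> tuple[str, str]:
    return ("summarize: " + document, summary)


def acceptability_example(sentence: str, acceptable: bool) -> tuple[str, str]:
    # A classification task: the label is emitted as a word, not a class index.
    return ("cola sentence: " + sentence, "acceptable" if acceptable else "unacceptable")


training_pairs = [
    translation_example("That is good.", "Das ist gut."),
    summarization_example("The river rose overnight and flooded the bank's parking lot.",
                          "River floods bank parking lot."),
    acceptability_example("The course is jumping well.", acceptable=False),
]

for source, target in training_pairs:
    print(f"{source!r} -> {target!r}")
```

Because every target is text, adding a new task means choosing a new prefix rather than adding a new output layer.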

Another major advance is the GPT series. Although GPT-2 and GPT-3 were designed primarily to generate text, they also proved strong at understanding tasks. These models are unidirectional: each word is predicted from the words that come before it. This may sound simple, but at large scale it yields strong language understanding. GPT-3's ability to perform new tasks from a short instruction or a few examples in the prompt set a new benchmark.
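The next-word loop behind GPT-style generation can be sketched with a toy probability table. The table and function names are hypothetical, and the toy model looks only at the last word, whereas a real GPT model conditions on the entire prefix through causal attention. Greedy decoding (always taking the most likely word) is used here for simplicity; real systems often sample instead.

```python
# Hypothetical toy table: probabilities of the next word given the previous word.
NEXT_WORD_PROBS = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 1.0},
    "sat": {"down": 0.8, "</s>": 0.2},
    "ran": {"</s>": 1.0},
    "down": {"</s>": 1.0},
}


def next_word_probs(prefix: list[str]) -> dict[str, float]:
    # A real model would use the whole prefix; this toy lookup uses only the last word.
    return NEXT_WORD_PROBS[prefix[-1]]


def generate_greedy(max_new_words: int = 10) -> list[str]:
    words = ["<s>"]
    for _ in range(max_new_words):
        probs = next_word_probs(words)
        best = max(probs, key=probs.get)  # greedy: take the most likely next word
        if best == "</s>":
            break
        words.append(best)  # the prediction becomes part of the next input
    return words[1:]


print(generate_greedy())  # ['the', 'cat', 'sat', 'down']
```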

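DeBERTa is a closer cousin to BERT, but it changes how content and position information are combined. BERT adds a position embedding to each word embedding at the input, so the two are mixed from the start. DeBERTa keeps separate vectors for a word's content and its relative position and computes attention scores from both (an approach its authors call disentangled attention), which boosts performance in reading comprehension and classification. Despite being comparable in size to BERT, DeBERTa achieves higher accuracy on standard benchmarks such as GLUE and SQuAD.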
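The difference can be written out for the attention score between positions i and j, simplified to a single head. This is a summary roughly following the DeBERTa paper's formulation rather than an exact specification: x_i is a word embedding, p_i an absolute position embedding, H the content (hidden) vectors, P the relative-position embeddings, δ(i,j) the clipped relative distance from i to j, and d the head dimension. DeBERTa drops the position-to-position term.

```latex
\begin{aligned}
\text{BERT:}\quad & h_i = x_i + p_i, \qquad
  A_{ij} = \frac{(h_i W_q)(h_j W_k)^{\top}}{\sqrt{d}} \\[6pt]
\text{DeBERTa:}\quad & Q^c = H W_{q,c},\; K^c = H W_{k,c},\; Q^r = P W_{q,r},\; K^r = P W_{k,r} \\
& \tilde{A}_{ij} = \underbrace{Q^c_i \,{K^c_j}^{\top}}_{\text{content-to-content}}
  + \underbrace{Q^c_i \,{K^r_{\delta(i,j)}}^{\top}}_{\text{content-to-position}}
  + \underbrace{K^c_j \,{Q^r_{\delta(j,i)}}^{\top}}_{\text{position-to-content}},
  \qquad A_{ij} = \frac{\tilde{A}_{ij}}{\sqrt{3d}}
\end{aligned}
```

In BERT, content and position interact only implicitly through the summed vector h_i; DeBERTa gives each interaction its own projection, and the factor 3 in the scaling reflects the three terms being added.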

These models not only deliver better results but are also more aligned with how language is used in real settings. Between them, they can handle longer sequences, respond with generated text, and adapt to varied inputs with less task-specific engineering.

New models succeed where BERT struggles. One key improvement is in how they are trained. Instead of predicting masked words, they learn to produce full sequences. This more closely reflects human language use, enabling better handling of tasks such as summarizing or generating responses.
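A minimal sketch of the GPT-style sequence objective: one sentence is split into (context, target) pairs so that every position after the first becomes a prediction target, compared with only the masked positions in BERT's objective. In practice all pairs are computed in a single forward pass using a causal mask; this loop just makes the pairs visible. T5 uses a different objective (a decoder generates corrupted spans), which is not shown, and the function name is hypothetical.

```python
def next_token_pairs(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Split one sequence into (context, target) pairs for next-token prediction."""
    # Every position after the first is a target, predicted from everything before it.
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


sequence = ["<s>", "the", "river", "bank", "flooded", "</s>"]
for context, target in next_token_pairs(sequence):
    print(" ".join(context), "->", target)
```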

T5 benefits from its uniform approach: every task takes text in and produces text out. That reduces the need for custom architecture and makes the model more consistent. GPT models excel at producing fluent, human-like text and can be used in systems that require conversational replies or content generation.

Memory handling is another area where these newer models shine. Standard self-attention compares every token with every other token, so its cost grows quadratically with input length, and BERT caps inputs at 512 tokens. Newer architectures process longer inputs by using sparse attention patterns. Longformer and BigBird combine local sliding-window attention with a few global tokens (BigBird also adds random connections), which lets them handle inputs of around 4,096 tokens, and their ideas have been adopted by more recent models.
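The sketch below builds a simplified Longformer-style mask: each token attends to neighbors within a fixed distance, plus a few global positions that see and are seen by everything. For a fixed window, the number of attended pairs grows roughly linearly with input length instead of quadratically. BigBird's random connections are omitted, real implementations use banded kernels rather than dense masks, and the names and sizes here are assumptions chosen for illustration.

```python
def sparse_attention_mask(n: int, half_window: int, global_positions=()) -> list[list[int]]:
    """Longformer-style pattern (simplified).

    Each position attends to neighbours up to `half_window` steps away on either side.
    Positions in `global_positions` attend to every position and are attended to by all.
    """
    global_set = set(global_positions)
    return [
        [1 if abs(i - j) <= half_window or i in global_set or j in global_set else 0
         for j in range(n)]
        for i in range(n)
    ]


def attended_pairs(mask: list[list[int]]) -> int:
    """Count how many (query, key) pairs the mask allows."""
    return sum(sum(row) for row in mask)


n = 1024
sparse = attended_pairs(sparse_attention_mask(n, half_window=64, global_positions=[0]))
full = n * n
print(f"full attention: {full} pairs, sparse: {sparse} pairs ({sparse / full:.1%})")
```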

Smaller versions of these newer models are also practical. T5-small (roughly 60 million parameters) and GPT-2 Medium (roughly 350 million) deliver solid performance with modest resource demands. They can run in apps or on devices that need quick responses without powerful servers.
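A back-of-the-envelope way to compare model footprints is parameter count times bytes per parameter. This sketch uses rounded parameter counts as assumptions and counts only the stored weights; activations, caches, and framework overhead add more at inference time, and training needs several times as much. The helper name is hypothetical.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_mb(num_params: int, dtype: str = "fp32") -> float:
    """Memory needed just to store the weights, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


# Rounded parameter counts, used only for illustration.
MODELS = {"T5-small": 60_000_000, "BERT-base": 110_000_000, "GPT-2 Medium": 350_000_000}

for name, params in MODELS.items():
    print(f"{name:>12}: {weight_memory_mb(params):7.0f} MB fp32, "
          f"{weight_memory_mb(params, 'fp16'):7.0f} MB fp16")
```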

These models are designed for real-world needs. They understand instructions more effectively, generate usable content, and adapt to a wider range of tasks. Their structure is more flexible, and their results are more reliable, which has helped them become the preferred option over BERT.

BERT’s influence will remain, but its era as the default model is over. T5, DeBERTa, and GPT-3 show that we now have options that are better suited to today’s challenges. They process longer texts, respond more naturally, and need less task-specific tuning. As models continue to improve, we’re moving toward systems that can understand and respond to language with more depth and less effort.

Newer models are also being built with transparency in mind. Openly released models such as LLaMA and Mistral offer high performance without requiring massive infrastructure. Because their weights are available, they can match or exceed the results of older models while being easier to inspect and adapt.

BERT now serves as a milestone in AI history and a testament to the significant progress the field has made. But it is no longer the best tool for modern systems. The field has moved forward, and the models taking BERT’s place are not just improvements; they are built on new ideas, better suited to how we use language today.

BERT changed how machines understand language, but it is no longer leading the way. Models like T5, GPT-3, and DeBERTa now outperform it in flexibility and practical use. They manage longer inputs, adapt to tasks easily, and generate more natural responses. While BERT paved the way, today’s models are built for real-world demands. The shift has happened: BERT has been replaced, and a new standard in language modeling is already in motion.

Advertisement

Multimodal models combine text, images, and audio into a shared representation, enabling AI to understand complex tasks like image captioning and alignment with more accuracy and flexibility

How AutoGPT is being used in 2025 to automate tasks across support, coding, content, finance, and more. These top use cases show real results, not hype

Think Bash loops are hard? Learn how the simple for loop can help you rename files, monitor servers, or automate routine tasks—all without complex scripting

Need to save Python objects between runs? Learn how the pickle module serializes and restores data—ideal for caching, model storage, or session persistence in Python-only projects

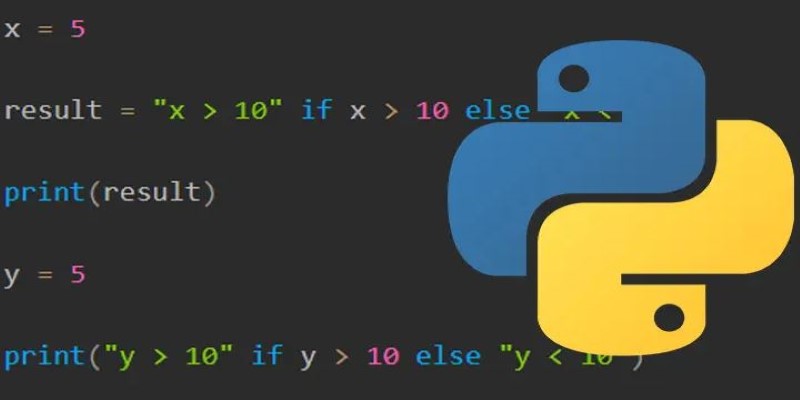

How to use the ternary operator in Python with 10 practical examples. Improve your code with clean, one-line Python conditional expressions that are simple and effective

The paperclip maximizer problem shows how an AI system can become harmful when its goals are misaligned with human values. Learn how this idea influences today’s AI alignment efforts

How the world’s first AI-powered restaurant in California is changing how meals are ordered, cooked, and served—with robotics, automation, and zero human error

Explore the best AI Reels Generators for Instagram in 2025 that simplify editing and help create high-quality videos fast. Ideal for content creators of all levels

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

How Fireworks.ai changes AI deployment with faster and simpler model hosting. Now available on the Hub, it helps developers scale large language models effortlessly

Getting the ChatGPT error in body stream message? Learn what causes it and how to fix it with 7 practical ChatGPT troubleshooting methods that actually work

Fond out the top AI tools for content creators in 2025 that streamline writing, editing, video production, and SEO. See which tools actually help improve your creative flow without overcomplicating the process