
Imagine explaining a photo without using words or describing a song without hearing it. That's how early AI systems worked—isolated, one skill at a time. But that's not how we think. We process sound, images, and language all at once. Multimodal models are changing the game by teaching machines to do the same.

Instead of just reading text or scanning images, these models combine different input types to understand context better. It's a shift from narrow tasks to broader understanding, making AI more adaptable, more accurate, and more natural to work with.

Multimodal models are built to handle more than one type of input—text, images, audio, and even sensor data. But they don't just process each stream on its own. What makes these models stand out is their ability to blend different inputs and find meaning across them. Show the model a photo and ask a question about it, and it won't treat the image and text separately. It reads them together, understands the connection, and responds with context.

This merging is called fusion. In early fusion, inputs are combined right after they come in, before much modality-specific processing. In late fusion, each input is processed separately and the results are combined only at the final step. Intermediate fusion takes the middle ground, blending partially processed features partway through the model. The choice depends on how closely the data types relate to each other and what the task needs.
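The three strategies differ mainly in where the modalities meet. The sketch below assumes tiny hand-picked weights and a single ReLU layer per stage, all hypothetical, just to show the data flow: early fusion concatenates raw features, intermediate fusion merges hidden features, and late fusion averages two separate scores.

```python
# Toy contrast between early, intermediate, and late fusion.
# Every weight here is a made-up placeholder; real models learn them.

def dot(a, b):
    """Dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

def relu_layer(x, weights):
    """One dense layer with ReLU; each row of `weights` produces one output."""
    return [max(0.0, dot(x, row)) for row in weights]

def early_fusion(text, image, joint_layer, head):
    # Concatenate the raw inputs first, then process them with one joint layer.
    return dot(relu_layer(text + image, joint_layer), head)

def intermediate_fusion(text, image, text_layer, image_layer, head):
    # Encode each modality separately, then merge the hidden features.
    hidden = relu_layer(text, text_layer) + relu_layer(image, image_layer)
    return dot(hidden, head)

def late_fusion(text, image, text_layer, image_layer, text_head, image_head):
    # Run two independent models and combine only their final scores.
    text_score = dot(relu_layer(text, text_layer), text_head)
    image_score = dot(relu_layer(image, image_layer), image_head)
    return (text_score + image_score) / 2

text, image = [1.0, 2.0], [3.0, 1.0]
print(early_fusion(text, image, [[1, 0, 1, 0], [0, 1, 0, -1]], [1, 1]))  # 5.0
print(intermediate_fusion(text, image, [[1, 1]], [[1, -1]], [1, 0.5]))   # 4.0
print(late_fusion(text, image, [[1, 1]], [[1, -1]], [1], [1]))           # 2.5
```

Only the early-fusion layer sees raw text and image values side by side (its first hidden unit adds `text[0]` and `image[0]`), so it is the one that can learn low-level cross-modal features. Late fusion is simpler and more modular, but its two models never see each other's inputs.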

The real strength of multimodal learning lies in linking things across formats. If a model sees a dog in a photo and reads the word “dog,” it should recognize they mean the same thing. That’s where shared representation comes in. It turns different inputs into a common format—usually vectors—so the model can measure how closely they relate. Whether it’s text or pixels, everything ends up in the same space, letting the model “understand” their connection.
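To make the idea of a shared space concrete, here is a minimal sketch that projects image features and text features, which start out with different sizes, into one 2-D space and compares them with cosine similarity. The feature values and the projection matrices (`IMAGE_PROJ`, `TEXT_PROJ`) are hypothetical; in a trained model the encoders and projections are learned from data.

```python
import math

def project(features, matrix):
    """Linear map into the shared space: one row of `matrix` per output dimension."""
    return [sum(w * f for w, f in zip(row, features)) for row in matrix]

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical encoder outputs: the image encoder gives 3 numbers, the text encoder 2.
dog_photo = [0.9, 0.1, 0.5]
dog_word = [0.45, 0.05]
cat_word = [0.05, 0.45]

# Hypothetical projection matrices; in a trained model these are learned.
IMAGE_PROJ = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
TEXT_PROJ = [[2.0, 0.0], [0.0, 2.0]]

photo_vec = project(dog_photo, IMAGE_PROJ)
print(cosine_similarity(photo_vec, project(dog_word, TEXT_PROJ)))  # close to 1.0
print(cosine_similarity(photo_vec, project(cat_word, TEXT_PROJ)))  # about 0.22
```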

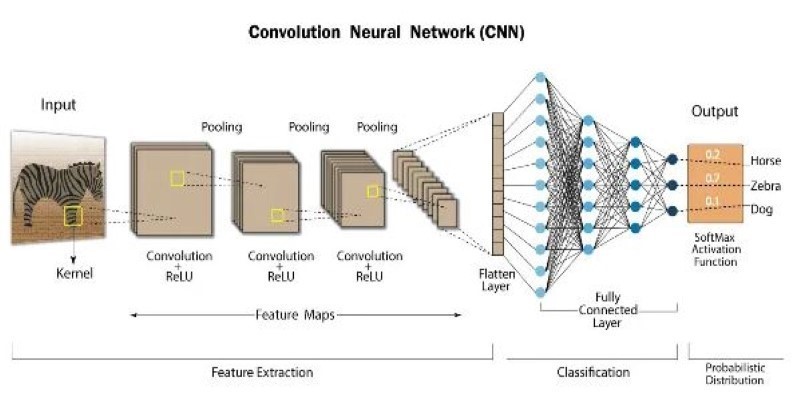

Each type of input is handled by its own encoder. Text passes through natural language models, images pass through vision systems, and audio models might work directly on the raw waveform or on a spectrogram. The outputs of these separate encoders are then aligned so they can work together.
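As one example of modality-specific processing, the sketch below turns a raw audio waveform into a magnitude spectrogram: a time-by-frequency grid that can then be fed to a vision-style or sequence model. It uses a naive DFT from the standard library purely for readability; real pipelines would normally use an FFT implementation and often add a mel-scale step. The frame size, hop length, and 1 kHz test tone are arbitrary choices for illustration.

```python
import cmath
import math

def spectrogram(samples, frame_size, hop):
    """Magnitude spectrogram: Hann-windowed frames, each passed through a naive DFT.

    Returns one list per frame, holding magnitudes for bins 0 .. frame_size // 2.
    """
    # Periodic Hann window to taper frame edges and reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size) for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):  # non-negative frequencies only
            coeff = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            magnitudes.append(abs(coeff))
        frames.append(magnitudes)
    return frames

# A 1 kHz test tone sampled at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(256)]

spec = spectrogram(tone, frame_size=64, hop=32)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), len(spec[0]), peak_bin)  # 7 33 8
print(peak_bin * SAMPLE_RATE / 64)        # 1000.0
```

Each bin here spans 8000 / 64 = 125 Hz, so the energy of the 1 kHz tone lands in bin 8 of every frame.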

Alignment means the model understands how inputs relate. For example, it needs to link a word in a caption to a specific object in an image. It does this by placing all data into the same vector space. This shared space lets the model measure how closely inputs are connected. If two items land near each other in this space, the system treats them as related.
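Word-to-region alignment can be sketched the same way. Assuming hypothetical embeddings for three caption words and three image regions (say, crops proposed by an object detector), each word is matched to the region it sits closest to in the shared space. This hard, argmax-style assignment is a simplification; many models learn soft, attention-style weights instead.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def align_words_to_regions(word_vecs, region_vecs):
    """Map each caption word to the image region whose embedding is most similar."""
    return {
        word: max(region_vecs, key=lambda region: cosine(vec, region_vecs[region]))
        for word, vec in word_vecs.items()
    }

# Hypothetical shared-space embeddings for "a dog chasing a ball on the grass".
words = {"dog": [0.9, 0.1, 0.0], "ball": [0.1, 0.9, 0.1], "grass": [0.0, 0.2, 0.9]}
regions = {"region_A": [0.8, 0.2, 0.1], "region_B": [0.2, 0.8, 0.0], "region_C": [0.1, 0.1, 0.95]}

print(align_words_to_regions(words, regions))
# {'dog': 'region_A', 'ball': 'region_B', 'grass': 'region_C'}
```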

Embeddings play a major role. Each input is converted into a dense vector, called an embedding, which lets the system compare items across types. These embeddings are often learned with contrastive learning: matching pairs are pulled closer together, and mismatched pairs are pushed apart.
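A minimal sketch of a contrastive objective, in the symmetric style popularized by CLIP, is shown below. It assumes a batch where image `i` and text `i` are the true pair and every other combination counts as a mismatch. It only computes the loss; an autodiff framework would be used to minimize it, and the fixed `temperature=0.1` is an illustrative value (CLIP-style models often learn it during training).

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """-log softmax(logits)[target], using the max-subtraction trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def contrastive_loss(image_embs, text_embs, temperature=0.1):
    """Symmetric InfoNCE-style loss; image i and text i are the matching pair."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # sims[i][j]: temperature-scaled similarity between image i and text j.
    sims = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
             for j in range(n)] for i in range(n)]
    # Each image should pick out its own caption among all texts in the batch...
    image_to_text = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    # ...and each caption should pick out its own image among all images.
    text_to_image = sum(cross_entropy([sims[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2

images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(contrastive_loss(images, matched_texts))  # small, near 0
print(contrastive_loss(images, swapped_texts))  # large, roughly 8.8
```

Lowering this loss raises the diagonal similarities (correct pairs) relative to the off-diagonal ones (mismatches), which is the pull-together, push-apart effect in vector form.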

Good training data is key. These models need matched inputs: videos with transcripts, images with captions, or audio with text. The better the match, the stronger the alignment the model learns. Without clear connections, the model struggles to combine inputs meaningfully.

We're already seeing multimodal models in action. One example is image captioning—where a model writes a sentence based on what's in a picture. Other tools can turn text into images or answer questions about what's in a video. These systems work because they understand how text and visuals relate.

In medicine, multimodal models are trained to link medical records, scans, and voice notes. This gives doctors a fuller picture and could speed up diagnosis. In customer service, AI tools are learning to combine speech, photos, and written messages to give faster answers. In education, multimodal systems can respond to voice, show relevant diagrams, and explain answers.

But there are challenges. Getting good training data takes time and resources. You need content where each part—text, image, audio—is aligned. A mistake in one part can affect the whole result. Another issue is bias. If the model trains on biased captions, it might carry those into its answers. This is a known risk with all AI, but it's harder to fix when multiple data types are involved.

It’s also hard to know how the model made a decision. With so many inputs, it’s tough to tell if a wrong answer came from the text, the image, or how they were mixed. This lack of clarity makes debugging more difficult.
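One common, if crude, way to probe this is modality ablation: remove one input and see how much the output changes. The sketch below assumes a hypothetical `toy_model` with a hand-written interaction term and uses zeros as the "removed" input, which is only one of several possible baselines.

```python
def toy_model(text, image):
    """Hypothetical fused model: one term per modality plus one interaction term."""
    t, i = sum(text), sum(image)
    return 0.5 * t + 1.5 * i + 2.0 * t * i

def ablation_report(model, text, image):
    """Zero out one modality at a time and measure how much the output drops."""
    full = model(text, image)
    without_text = model([0.0] * len(text), image)
    without_image = model(text, [0.0] * len(image))
    return {
        "full": full,
        "text_effect": full - without_text,
        "image_effect": full - without_image,
    }

report = ablation_report(toy_model, [1.0, 1.0], [0.5, 0.5])
print(report)  # {'full': 6.5, 'text_effect': 5.0, 'image_effect': 5.5}
```

The two effects add up to 10.5, more than the full output of 6.5. The interaction term (4.0) disappears whichever modality is removed, so it is counted twice. That double counting is a small version of the debugging problem: the ablation alone cannot say whether the credit belongs to the text, the image, or the way they were mixed.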

Multimodal models are helping AI understand context better. Instead of needing a separate tool for each task, a single model can work across formats. This means it can shift between describing images, writing text, or answering questions about videos. That kind of flexibility is rare in older AI models.

We're seeing more tools that create images from descriptions, explain visuals in words, or take in both text and speech. These systems aren't perfect, but they open new ways to build smart, useful tools. In the future, we may see AI that watches and listens like a human, simultaneously responding to input across several formats.

In design, teams may work with AI that reads what they type and sees the images they create, giving feedback that reflects both. In research, models could read charts, understand written findings, and listen to lab notes. Because all of these sources would share one representation, the model could spot patterns across them.

The potential is big, but we still need better training methods, cleaner data, and tools that explain how these systems reach their answers. As more tasks rely on AI, these improvements will matter more.

Multimodal models are helping machines act less like calculators and more like thoughtful helpers. Instead of focusing on one kind of input, they combine text, visuals, and sound to make decisions. This makes them useful in healthcare, design, and customer service. But with that power come new problems—bias, data quality, and lack of transparency. These aren’t easy fixes, but the progress shows real promise. As more industries adopt these systems, multimodal learning will likely play a bigger role in how AI fits into our lives. Machines are learning to "see" and "hear," changing how we work with them.

Advertisement

Anthropic secures $3.5 billion in funding to compete in AI with Claude, challenging OpenAI and Google in enterprise AI

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

How using Xet on the Hub simplifies code and data collaboration. Learn how this tool improves workflows with reliable data versioning and shared access

Explore the best AI Reels Generators for Instagram in 2025 that simplify editing and help create high-quality videos fast. Ideal for content creators of all levels

Discover three new serverless inference providers—Hyperbolic, Nebius AI Studio, and Novita—each offering flexible, efficient, and scalable serverless AI deployment options tailored for modern machine learning workflows

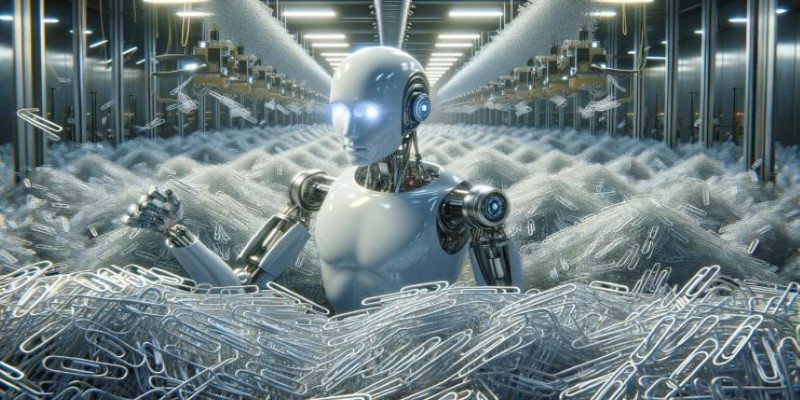

The paperclip maximizer problem shows how an AI system can become harmful when its goals are misaligned with human values. Learn how this idea influences today’s AI alignment efforts

Discover the next generation of language models that now serve as a true replacement for BERT. Learn how transformer-based alternatives like T5, DeBERTa, and GPT-3 are changing the future of natural language processing

Ready to run powerful AI models locally while ensuring safety and transparency? Discover Gemma 2 2B’s efficient architecture, ShieldGemma’s moderation capabilities, and Gemma Scope’s interpretability tools

How Fireworks.ai changes AI deployment with faster and simpler model hosting. Now available on the Hub, it helps developers scale large language models effortlessly

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

Learn how to run privacy-preserving inferences using Hugging Face Endpoints to protect sensitive data while still leveraging powerful AI models for real-world applications

Think Bash loops are hard? Learn how the simple for loop can help you rename files, monitor servers, or automate routine tasks—all without complex scripting