Advertisement

Hebrew is a language with deep cultural roots and a growing digital presence, yet it often gets sidelined in the world of large language models. Most AI tools are built with English in mind, leaving Hebrew speakers with models that miss the mark. That’s starting to change. The new Open Leaderboard for Hebrew LLMs offers a transparent way to test and compare Hebrew-focused models on real tasks that matter. It's not just about scores—it’s about building smarter, more accurate tools for Hebrew communication. This initiative finally gives Hebrew its own space in the AI world, and the timing couldn’t be better.

Languages are complex, and Hebrew is no exception. It has a unique grammar system, distinct morphology, a right-to-left script, and a vocabulary that doesn't always translate easily into other languages. Most general-purpose LLMs—like GPT or LLaMA—are trained primarily in English and a handful of other high-resource languages. As a result, their performance in Hebrew tends to lag, sometimes producing responses that are grammatically awkward or semantically unclear.

The Open Leaderboard for Hebrew LLMs fills this void by providing an organized and continuous means to evaluate and refine models fine-tuned or created for Hebrew. Rather than depending on English-preponderant benchmarks, the leaderboard employs tasks and datasets specific to Hebrew's structure and use cases. These include question answering, summarization, translation, sentiment analysis, and named entity recognition, among others—all done in Hebrew.

The leaderboard doesn't just evaluate accuracy. It looks at practical utility, too, by including metrics such as factuality, fluency, and contextual understanding in Hebrew. This allows researchers and developers to focus not only on performance but on real-world usability.

At its core, the leaderboard functions as a public-facing platform where contributors can submit their Hebrew LLMs for evaluation. Models are tested on a fixed set of Hebrew language tasks using standard datasets that are freely available and openly licensed. These tasks were selected based on input from Hebrew linguists, data scientists, and the open-source AI community in Israel and abroad.

Once a model is submitted, it is evaluated in a consistent, automated pipeline. The results are then published publicly on the leaderboard. Every model is scored across multiple dimensions, including precision, recall, F1 score, and BLEU for language generation tasks. But unlike many leaderboards that just show raw scores, this platform includes interpretive insights to help readers understand what those scores mean in the context of Hebrew.

For example, if a model performs well on Hebrew sentiment analysis but poorly on question answering, the breakdown helps pinpoint where improvement is needed. The goal isn’t just competition. It’s collaboration, learning, and shared progress. Developers can use the data to guide training, refine fine-tuning techniques, and explore where Hebrew LLMs struggle or succeed.

Importantly, all results are open. The leaderboard encourages full transparency by requiring contributors to disclose their training data, compute budget, and whether the model is open-source or proprietary. This helps ensure a level playing field while promoting reproducibility.

The Open Leaderboard for Hebrew LLMs is more than a ranking chart—it’s an ecosystem builder. By focusing on open contributions and shared resources, it hopes to encourage collaboration across universities, startups, and individual developers working with Hebrew text. The tools and infrastructure are built to support continuous improvement, and anyone can participate.

A key part of this community-driven effort is the open dataset initiative. Rather than relying on closed or commercial Hebrew corpora, the leaderboard draws from publicly available data: Wikipedia, Hebrew news sources with permissive licenses, religious texts, conversational datasets, and user-contributed text corpora. Each dataset is reviewed for balance, quality, and representation.

The leaderboard also welcomes feedback. It includes a way for users to flag evaluation concerns, propose new tasks, or report dataset issues. This makes it dynamic and responsive rather than static and outdated, which is often the case with one-time benchmarks.

Another benefit is inclusivity. Hebrew speakers span multiple communities—secular, religious, Mizrahi, Ashkenazi, Ethiopian, and more. Language use can vary by group and region. By supporting a range of data types and dialects, the leaderboard helps ensure that AI tools not only understand textbook Hebrew but also real-world Hebrew as it's spoken and written across different communities.

The launch of the Open Leaderboard for Hebrew LLMs marks a new phase in language AI development for non-English speakers. It creates a space where Hebrew models can be developed, tested, and improved with clear, shared goals. And because it’s open, it levels the playing field between large corporations and small research teams.

Already, a few early models have been submitted, and the leaderboard has started shaping the way researchers think about building AI tools for Hebrew. This includes better pre-training datasets, smarter tokenization methods for right-to-left languages, and new evaluation strategies that reflect the nuances of Hebrew communication.

In time, the leaderboard could become a central resource for developers creating everything from chatbots to educational software in Hebrew. It may also serve as a blueprint for leaderboards in other underrepresented languages that face similar challenges.

The Open Leaderboard for Hebrew LLMs is helping improve AI tools built for Hebrew by offering a public benchmark tailored to the language's unique structure. It highlights the growing need for better support of Hebrew in AI and invites contributions from researchers, developers, and language experts. By focusing on transparency and shared learning, it promotes collaboration rather than competition. This project is about more than just rankings—it's about making AI more accurate and useful for real Hebrew speakers. As participation grows, the leaderboard could gradually reshape how Hebrew language models are developed, tested, and applied in practical, everyday uses.

Advertisement

How PaliGemma 2, Google's latest innovation in vision language models, is transforming AI by combining image understanding with natural language in an open and efficient framework

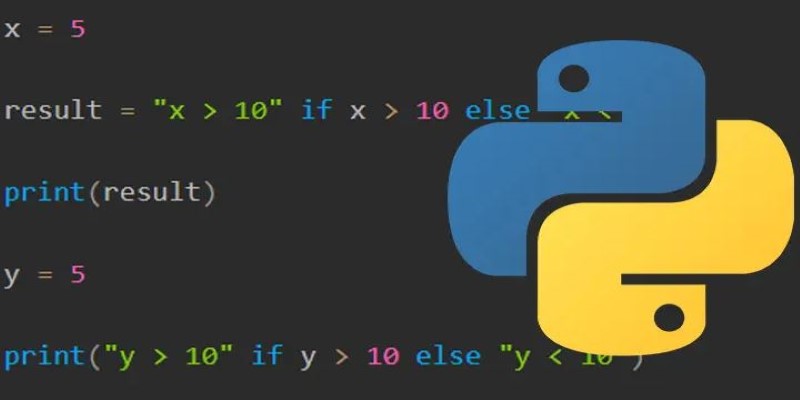

How to use the ternary operator in Python with 10 practical examples. Improve your code with clean, one-line Python conditional expressions that are simple and effective

How the apt-get command in Linux works with real examples. This guide covers syntax, common commands, and best practices using the Linux package manager

What is Auto-GPT and how is it different from ChatGPT? Learn how Auto-GPT works, what sets it apart, and why it matters for the future of AI automation

Learn how to run privacy-preserving inferences using Hugging Face Endpoints to protect sensitive data while still leveraging powerful AI models for real-world applications

How AutoGPT is being used in 2025 to automate tasks across support, coding, content, finance, and more. These top use cases show real results, not hype

How using Xet on the Hub simplifies code and data collaboration. Learn how this tool improves workflows with reliable data versioning and shared access

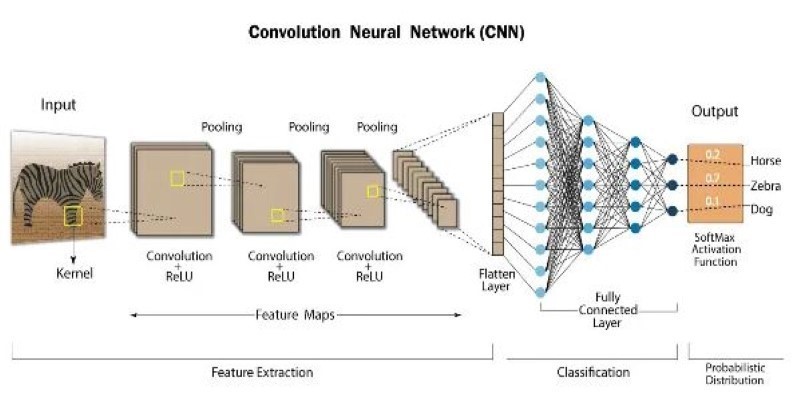

How atrous convolution improves CNNs by expanding the receptive field without losing resolution. Ideal for tasks like semantic segmentation and medical imaging

Need to save Python objects between runs? Learn how the pickle module serializes and restores data—ideal for caching, model storage, or session persistence in Python-only projects

How RLHF is evolving and why putting reinforcement learning back at its core could shape the next generation of adaptive, human-aligned AI systems

Looking for the best way to chat with PDFs? Discover seven smart PDF AI tools that help you ask questions, get quick answers, and save time with long documents

Anthropic secures $3.5 billion in funding to compete in AI with Claude, challenging OpenAI and Google in enterprise AI