Advertisement

There’s something oddly satisfying about watching a machine complete your sentence before you finish typing. Behind that quiet magic are large language models (LLMs). Most people hear names like ChatGPT or Claude, but Hugging Face is where you find the real inventory—raw, open-source, ready-to-use models that power everything from chatbots to content filtering systems. If you're looking for the best LLMs available right now on Hugging Face, here's a guide to ten of the most popular and useful ones.

LLaMA 2 is Meta’s answer to the need for open and efficient LLMs. Released with fewer restrictions than its predecessor, LLaMA 2 models are available in sizes like 7B, 13B, and 70B parameters. What makes them stand out is their balance of performance and resource usage. They're good for downstream tasks like summarization, Q&A, and classification, especially when you fine-tune them a bit. If you're running experiments on limited hardware, the 7B version is a good place to start.

Mistral 7B is one of those smaller models that punches way above its weight. Despite having 7 billion parameters, it competes with larger models, such as LLaMA 2-13B, in many tasks. It's fast, efficient, and performs surprisingly well in coding, reasoning, and multilingual benchmarks. The architecture uses grouped-query attention and sliding window techniques to cut down compute without losing depth. It's great for real-time applications where speed matters.

If you're looking for something huge, the Falcon 180B is one of the largest openly available models. It was released by the Technology Innovation Institute (TII) in the UAE and trained on a staggering 3.5 trillion tokens. The model is autoregressive and optimized for high-throughput inference. Its size makes it better suited for cloud environments or advanced research rather than small-scale hobby projects. It excels at long-form reasoning and detailed generation.

Mixtral is a mixture-of-experts model that works differently than most. It activates only a subset of its parameters (2 of 8 experts) for each input, which means you get a 12.9B model's brainpower but only use 2 experts at a time—roughly 12.9B compute instead of 65B. This setup leads to solid performance and faster inference. Mixtral is especially useful in situations where you need a wide skillset (e.g., coding, translation, creative writing) handled by one flexible model.

Phi-2 is a small model with just 2.7 billion parameters, but it’s surprisingly good at reasoning and math. Microsoft trained it using a "textbook-quality" dataset made up of curated academic and reasoning-focused content. While it’s not the best at casual conversation or fluff tasks, it handles logic problems and instruction following better than many larger models. If you need compact and focused performance, Phi-2 is a solid option.

GPT-NeoX-20B was one of the early open models that pushed the size boundaries. Built by EleutherAI, it uses the GPT-3 style decoder architecture with 20 billion parameters. It’s not the most efficient by today’s standards, but it's still used in many projects thanks to its open license and decent performance. Developers often fine-tune NeoX for specific business or academic use cases. It’s reliable, though you may need to tune or augment it for modern tasks.

Command R+ is designed with retrieval-augmented generation (RAG) in mind. That means it's good at pulling in external context and integrating it into answers. It’s open weights, optimized for RAG, and performs well across retrieval-heavy tasks like search, customer support, and knowledge management. It's newer, so you may not see it mentioned alongside giants like LLaMA, but it’s becoming a favorite in systems that use a lot of documents or structured knowledge.

Zephyr is Hugging Face’s own fine-tuned model series based on Mistral and LLaMA. It's specifically optimized for helpfulness, safety, and alignment with human expectations. The training process includes Reinforcement Learning from Human Feedback (RLHF), which helps Zephyr perform well in assistant-style roles. Zephyr models are often smaller (7B range) but highly optimized for response quality. If you want a model that acts more like an assistant out of the box, Zephyr is worth a look.

OpenChat is an ongoing project focused on creating open alternatives to ChatGPT using LLaMA and Mistral bases. The goal is to provide chat-style models that are as helpful and fluent as proprietary ones. The tuning methods include DPO (Direct Preference Optimization) and SFT (Supervised Fine-Tuning), aimed at aligning the model better with human intent. It’s one of the better choices for chatbot-style interfaces without using closed-source APIs.

Yi is one of the newer entrants and stands out for its strong multilingual abilities. Developed by 01.AI, the Yi-34B model performs especially well in Chinese, English, and other languages. It supports instruction-following, code generation, and content writing. Although it's large, it has been optimized for inference efficiency. Yi is gaining attention for its clean design, robust pretraining corpus, and balanced multilingual handling, making it a good pick for global use cases.

The world of large language models is moving fast, but Hugging Face is where most of the meaningful open work is happening. Whether you're experimenting, building apps, or looking for alternatives to closed systems, these ten models represent the current top tier. Each one has different strengths—some are small and fast, others huge and thorough. What matters is choosing the one that fits your use case and compute budget. You don't need a 70B model to get great results. Sometimes, a well-tuned 7B model like Mistral or Zephyr can do the job better, faster, and cheaper—especially for edge deployments or mobile-focused applications.

Advertisement

Need to save Python objects between runs? Learn how the pickle module serializes and restores data—ideal for caching, model storage, or session persistence in Python-only projects

How to use the ternary operator in Python with 10 practical examples. Improve your code with clean, one-line Python conditional expressions that are simple and effective

Multimodal models combine text, images, and audio into a shared representation, enabling AI to understand complex tasks like image captioning and alignment with more accuracy and flexibility

Ready to run powerful AI models locally while ensuring safety and transparency? Discover Gemma 2 2B’s efficient architecture, ShieldGemma’s moderation capabilities, and Gemma Scope’s interpretability tools

How RLHF is evolving and why putting reinforcement learning back at its core could shape the next generation of adaptive, human-aligned AI systems

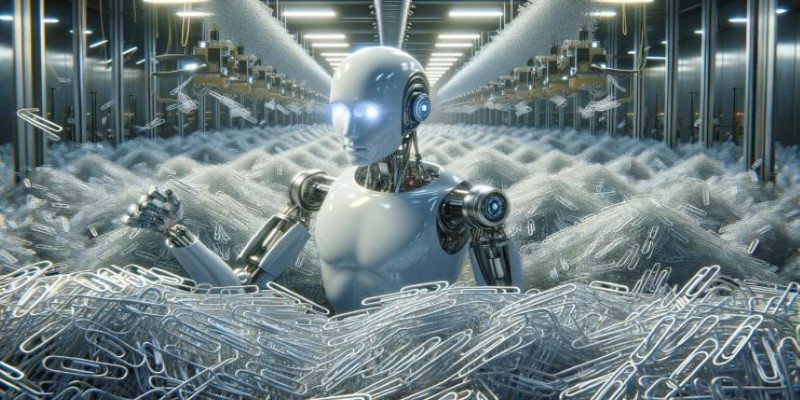

The paperclip maximizer problem shows how an AI system can become harmful when its goals are misaligned with human values. Learn how this idea influences today’s AI alignment efforts

How can Google’s Gemma 3 run on a single TPU or GPU? Discover its features, speed, efficiency and impact on AI scalability.

How the world’s first AI-powered restaurant in California is changing how meals are ordered, cooked, and served—with robotics, automation, and zero human error

Fond out the top AI tools for content creators in 2025 that streamline writing, editing, video production, and SEO. See which tools actually help improve your creative flow without overcomplicating the process

Microsoft’s new AI model Muse revolutionizes video game creation by generating gameplay and visuals, empowering developers like never before

Discover the next generation of language models that now serve as a true replacement for BERT. Learn how transformer-based alternatives like T5, DeBERTa, and GPT-3 are changing the future of natural language processing

Think Bash loops are hard? Learn how the simple for loop can help you rename files, monitor servers, or automate routine tasks—all without complex scripting